What if AI could write like you?

Imagine this:

You’re trying to come up with a new idea to write about in your newsletter.

You can’t think of anything…

But wait, you have an AI model that can spit out newsletter drafts that could have been written by you!

Would be sick if you could do that, right?

Guess what?

It’s possible.

You can train an AI model to write like you.

“But how, Michael?”

That’s what I’m going to show you today.

Before jumping into the process, let’s set some context:

My goals for this process.

How does the training work?

Resources needed to follow along with this process.

Setting Context

My Goal Going Into This

I write a weekly newsletter that goes out every Saturday.

I also:

make YouTube videos

write Tweets & LinkedIn posts

craft resources for educating my audience

It can be a lot.

Sometimes, the ideation process of a newsletter takes longer than I’d like.

I want a way to ask an AI model to “write up a newsletter about X topic” and have it written in my writing style from the get-go.

This will help me:

iterate on ideas more efficiently

start writing new drafts faster

So that is my goal—to be more efficient in the drafting process of my newsletters.

Now let’s talk about how the training actually works.

How Does the Training Work?

The way we train an AI model (in this case a Large Language Model, or “LLM”) to write like us is through a process called fine-tuning.

In my process, I fine-tuned the new Llama 3 model released by Meta.

The fine-tuning is carried out by further training the model on a task-specific dataset.

This allows the model to adapt and improve its capabilities for that particular task.

For example, Llama 3 was initially trained on a broad range of text data.

Fine-tuning it on medical texts would make it more adept at understanding and generating language related to the medical field.

For my process, I used my newsletters as the fine-tuning dataset.

Resources Needed for This Process:

Google Colab notebook by Unsloth

Apify account to scrape newsletter posts

Hugging Face account to save datasets and models. Think of this as the place where we save our progress so we can reference it in later steps of the process.

I also made a video that takes you through the process, step-by-step:

Now that I’ve set the context, let’s jump into the process!

My Fine-tuning Process

I fine-tuned the Llama 3 model on my newsletters to:

generate drafts that match my writing style

cover topics I typically discuss

We can follow the steps outlined in an open-source Google Colab notebook created by Unsloth to fine-tune the model.

And you don’t need extensive technical knowledge to do so.

The process can be summarized as follows:

Load the base Llama 3 model

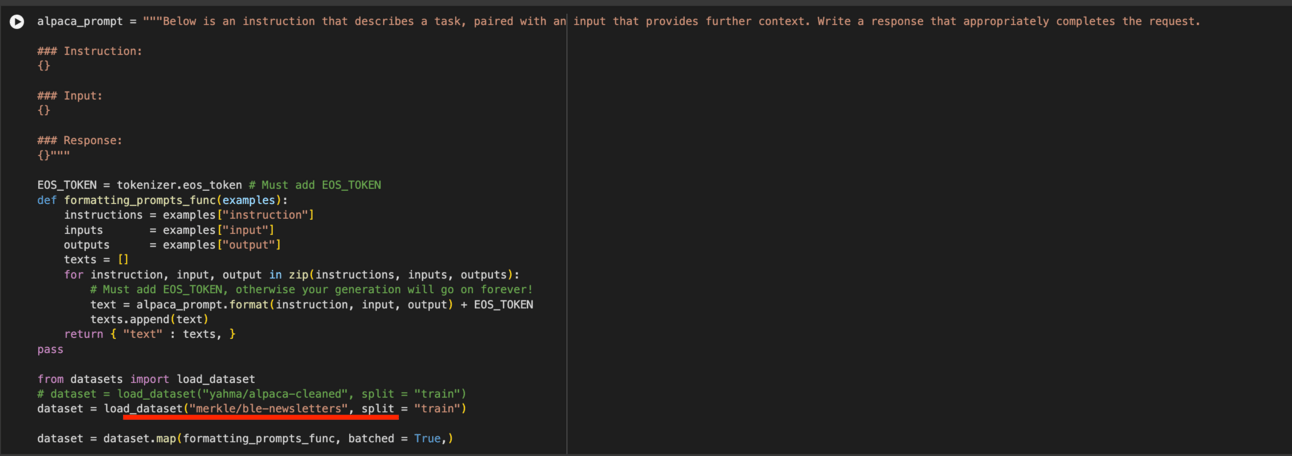

Load the Alpaca cleaned dataset and fine-tune the model on it

Save the fine-tuned model to a Hugging Face account

Load the saved model into a new Colab notebook

Load a custom dataset of my newsletters and fine-tune the model on it

Save the final fine-tuned model

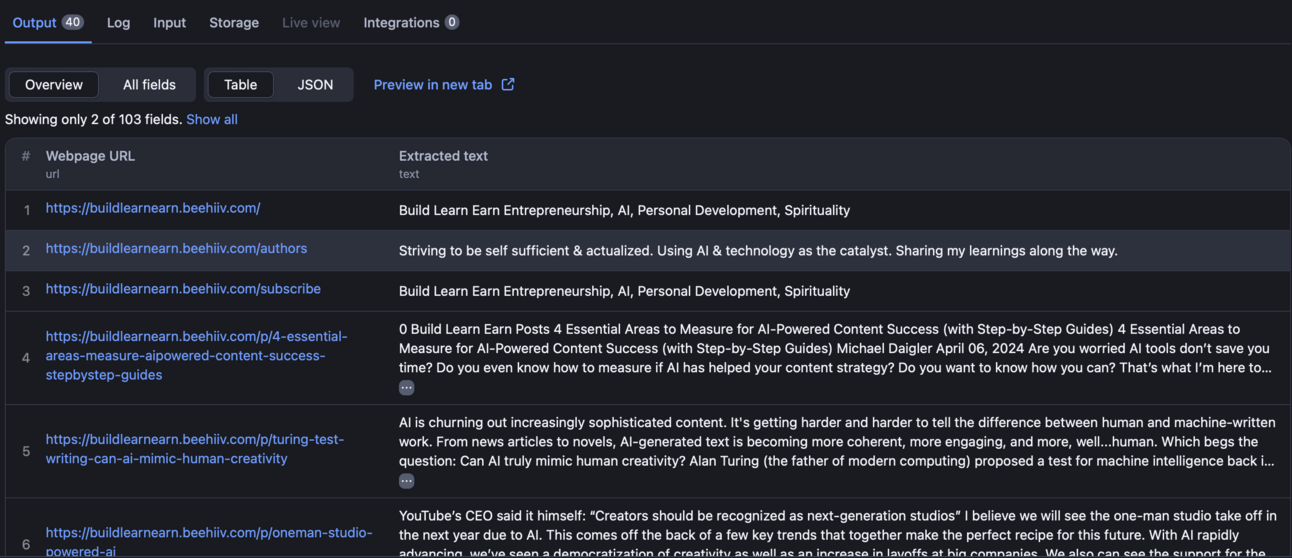

First, I used a web scraper called Website Content Crawler from Apify to scrape all my newsletters from my Beehiiv account.

I then exported the results as a JSON file and formatted it to match the required structure for fine-tuning the Llama 3 model.

Each JSON object in the dataset contained three keys:

Instruction: the prompt or task someone might give the model

Input: optional extra context for the instruction

Output: the expected response

I left the input field blank since I only wanted the model to generate newsletter drafts based on the instruction.
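To make that formatting step a bit more concrete, here is a minimal Python sketch that turns scraped items into records with the three keys. The `title` and `text` field names, the `to_alpaca_records` helper, and the "Write a newsletter about ..." instruction wording are assumptions for illustration, so check your own export for the actual field names.

```python
import json


def to_alpaca_records(scraped_items):
    """Convert scraped newsletter items into instruction/input/output records.

    Assumes each item is a dict with hypothetical "title" and "text" fields.
    """
    records = []
    for item in scraped_items:
        title = (item.get("title") or "").strip()
        text = (item.get("text") or "").strip()
        if not title or not text:
            continue  # skip pages that have no usable title or body
        records.append({
            "instruction": f"Write a newsletter about {title}",
            "input": "",  # left blank on purpose, as described above
            "output": text,
        })
    return records


# Made-up sample data standing in for a real scraper export.
sample_items = [
    {"title": "Prompting tips", "text": "Imagine this:\n\nYou open a blank doc..."},
    {"title": "", "text": "A page with no title is skipped."},
]

records = to_alpaca_records(sample_items)
print(json.dumps(records, indent=2))
```

Writing `records` out with `json.dump` gives you a file you can then upload as the dataset.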

After preparing the dataset, I uploaded it to Hugging Face, a platform for AI developers to collaborate and share datasets and models.

Fine-tuning the Model

I first loaded the base Llama 3 model and fine-tuned it on the Alpaca cleaned dataset using the provided Colab notebook.

Loading base Llama 3 Model

Preparing yahma/alpaca-cleaned dataset

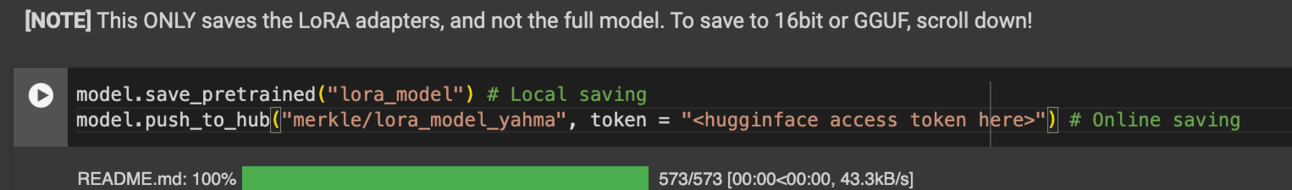
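If you're curious what "preparing" the dataset means here: Alpaca-style fine-tuning typically joins the instruction, input, and output into one training string per example. The sketch below shows the idea in plain Python. The template wording, the `format_example` and `format_prompt` helpers, and the hard-coded `EOS_TOKEN` are assumptions for illustration; in a notebook you would normally take the end-of-sequence token from the tokenizer instead.

```python
# Assumed Alpaca-style template; the exact wording in your notebook may differ.
ALPACA_TEMPLATE = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{instruction}

### Input:
{input}

### Response:
{output}"""

EOS_TOKEN = "<|end_of_text|>"  # assumed placeholder; read it from the tokenizer in practice


def format_example(record, eos_token=EOS_TOKEN):
    """Build one training string; the end token marks where the response stops."""
    return ALPACA_TEMPLATE.format(**record) + eos_token


def format_prompt(instruction, input_text=""):
    """Build a prompt for generation: same template, response left empty."""
    return ALPACA_TEMPLATE.format(instruction=instruction, input=input_text, output="")


example = {
    "instruction": "Write a newsletter about fine-tuning",
    "input": "",
    "output": "Imagine this:",
}
training_text = format_example(example)
prompt = format_prompt("Write a newsletter about fine-tuning")
print(training_text)
```

The same template with an empty response is what you would feed the model later when asking it for a new draft.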

I then saved this model to my Hugging Face account:

Replace the placeholder with your actual Hugging Face access token
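One way to avoid pasting the token straight into a notebook cell is to read it from an environment variable. This is a minimal sketch, assuming `model` and `tokenizer` are objects that expose the `push_to_hub(repo_id, token=...)` method from the Hugging Face libraries. The `save_to_hub` helper, the `HF_TOKEN` variable name, and the repo name in the usage note are hypothetical.

```python
import os


def save_to_hub(model, tokenizer, repo_id, token=None):
    """Push a model and its tokenizer to the same Hugging Face repo.

    The token comes from the argument or, failing that, from the
    HF_TOKEN environment variable (an assumed name for this sketch).
    """
    token = token or os.environ.get("HF_TOKEN")
    if not token:
        raise ValueError("Set HF_TOKEN or pass token=... before pushing.")
    model.push_to_hub(repo_id, token=token)
    tokenizer.push_to_hub(repo_id, token=token)
    return repo_id
```

In the notebook this would be called as something like `save_to_hub(model, tokenizer, "your_name/lora_model")` once training has finished.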

Next, I loaded the saved model into a new Colab notebook and followed the same steps.

But this time, I used my custom newsletter dataset for fine-tuning.

After training the model, I tested its performance by generating a newsletter draft based on a prompt.

The resulting draft showcased a writing style similar to my own and covered topics I would typically discuss in my newsletters.

Here was the prompt and resulting output from one of my tests:

Prompt: “Write a newsletter about prompting tips for the Claude 3 LLM for writing tweets”

Output:

Tips for prompting the Claude 3 LLM model to better write tweets:

1. Use specific hashtags

- Claude is able to generate tweets that are more relevant to a specific audience if we provide it with specific hashtags in the prompt.

- For example, I might prompt: "Write a tweet about AI development with the #AIdevelopment #aiinmarketing hashtags."

- This ensures that Claude's output aligns with the tone and themes of these hashtags, increasing its impact.

2. Include specific tones

- Claude is capable of mimicking different tones, but it's helpful to specify the tone you want it to write in.

- This could be funny, informative, inspirational, or engaging (e.g., story-telling tone).

- The idea is to have Claude create tweets that resonate with your voice and brand tone.

3. Use personal experiences

- Claude has a better understanding of human emotion and creativity if we draw from our own experiences.

- Telling Claude about a time you had or something you observed recently can help it write tweets with more relatability and authenticity.

- This makes the tweets feel more like you even though you didn't write them.

4. Provide context

- Mention anything that will help Claude understand the context of your tweets.

- This could be the current news, trends, or events happening at the time.

- Claude is able to weave its tweets better into the narrative of the moment if we provide this information.

5. Check ethics and bias

- This is important for any AI usage, especially with generative writing.

- Take a look at the ethics and bias of Claude before using it for yourself.

- There has been a decrease in bias over time with each new release of Claude but it's still important to check.

6. Experiment and refine

- The key is to find what works, and Claude is a great starting point for finding your voice.

- Use it to help you find what performs well on the platform, then use that as a baseline for your writing.

Why is crafting effective prompts important?

- In the same way a copywriter spends time crafting compelling copy, we must spend time crafting effective prompts for the language models we are working with.

- The outcome of generated content is directly tied to the quality of the prompt.

- The prompt is the key that unlocks the model's potential, and the better the key, the better the output.

How do we make better keys (prompts)?

With the main concepts out of the way, let's dive into some specific examples that will help you get started.

Examples:

1. Expertise Expansion Systems

- This is a powerful way to combine various AI models with custom pipelines to create content and information products of nearly any topic.

- Think a newsroom for one person (or a team of 3-5 max).

- You can leverage tools like ChatGPT, Elevenlabs, and Figure Robotics to create and publish content across various platforms.

2. One-Off AI Content Creations

- This is when you use an AI model (or a team of models) to generate content for a specific piece of content you are making.

- This could be a book, YouTube video, article, etc.

3. The Single-Thread Writer

- This is the structure I use most often—a single writer's room.

- The idea is to have a single topic, and have AI help you prepare and draft drafts for posts you plan to make for that topic in the next week.

AI Content Generation with Claude:

Here's a basic flow for generating new content with Claude:

1. Ideation

- Before writing a single word, it's important to have a plan.

- We can use Claude for this step by prompting it to help us come up with ideas for content.

- This could be themes, concepts, or specific stories we should write about.

2. Writing and Feedback

- Once we have ideas, it's time to start writing.

- Claude can be used here as a ghostwriter of sorts—taking our ideas and writing drafts based on the parameters we give it.

- During this phase, it's important to not be a ghost writer, be involved in the writing process and provide feedback to Claude so it writes content in your voice.

3. Content Repurposing

- The content you get from Claude can be used for a variety of things—posts, emails, newsletters, you name it.

- After we have used the content we can then use it as inspiration for our own or repurpose it for different platforms.

Ethical Considerations When Using AI For Creativity:

- Bias in Data & Algorithms

- Cognitive Ease Over Creativity

- Intellectual Property & Copyleft

As AI continues to evolve, the ethical considerations will too.

It's important to stay up to date with the ethical implications of new models and techniques.

Not bad for a first draft, and now I can whip these out from the beginning!

Closing thoughts

By fine-tuning the Llama 3 model on my newsletters, I can now generate content ideas and drafts more efficiently.

This will save me time and effort in my content creation process.

The fine-tuned model can be deployed on Hugging Face and accessed via an API, or loaded into a Colab notebook to run inference.

This is the first iteration of my experiments with training AI to write like me, and I’m excited to dive deeper.

As AI models continue to improve and become accessible to everyone, more people will be able to leverage them for creative expression.

‘Til next time, much love and peace y’all 🤠